Basic Linear Data Structures

Course Overview

- Why Take It: Get ready for coding interviews and seriously level up your core programming skills.

- What You'll Master: Essential data structures (Arrays, Strings, Sets, Hash Maps, Heaps) and core patterns (Two Pointers, Sliding Window, Binary Search, BFS, DFS, Backtracking).

- The Goal: Build your "efficiency intuition" to spot the right pattern and avoid slow, brute-force answers.

Data Structures Deep Dive

Linear Structures

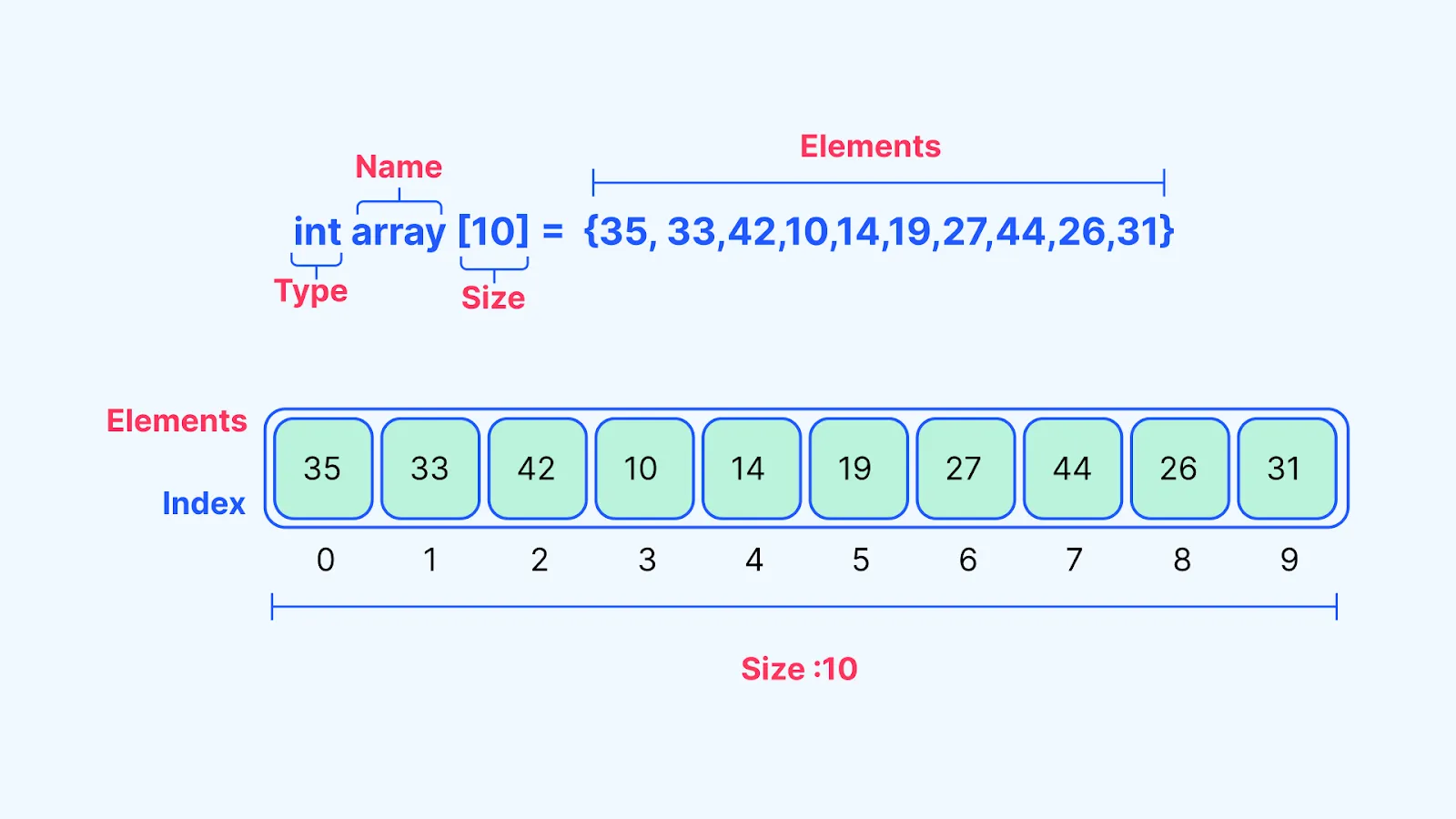

Arrays

- The Default: Arrays are the go-to for linear, ordered data (lists, numbers, etc.).

- The Good Part: Super fast access by index ($O(1)$ time) because they're stored in contiguous memory.

- The Bad Part: Inserting or deleting in the middle or front is slow ($O(N)$ time) because you have to shift everything.

-

Interview Tip: You'll use them constantly for traversal, indexing, comparing ends, and setting up things like Sliding Windows. Don't underestimate them!

Strings

- Basically Arrays: They're just arrays of characters.

- The Big Catch (Immutability): In most languages, changing a string creates a new one.

- Efficiency Warning: Concatenating strings in a loop (

result = result + char) is a beginner's mistake—it can be $O(N^2)$! Instead, build a list of characters and then join it at the end ($O(N)$). -

Interview Tip: String problems are often secretly Two-Pointer or Sliding Window problems, not brute-force checks.

Sets

- What They Are: A collection of unique values (no duplicates), with no specific order.

- The Superpower: Checking if a value exists is lightning fast—constant time ($O(1)$) on average.

- When to Use Them:

- Uniqueness (finding duplicates).

- Existence (checking if you've seen something before).

- Fast membership checks.

- Not for: Storing key-value pairs (use a Hash Map for that).

Hash Maps (A.K.A. Dictionaries)

- What They Are: Key-value storage. Think of them as arrays where you use custom keys (like strings) instead of numbered indices.

- The Superpower: Insanely fast look up and insertion ($O(1)$ on average). They use a "hash function" to find the data instantly.

- The Key Rule: Keys must be hashable (e.g., numbers, strings, tuples). Lists and other Hash Maps are usually not hashable.

- The Mindset Shift: Instead of brute-forcing by asking the same question over and over, you use a Hash Map to remember the answer as you go. This is how you often jump from an $O(N^2)$ solution to a super-fast $O(N)$ solution.

- Common Use: Creating a Frequency Map to count how often elements appear.

- Example Problem: The Two Sum problem is a classic Hash Map win. You calculate the complement needed and check if you've already stored it in the map.

Efficiency: Big O Notation

- What it is: A way to measure how a program slows down as the input gets bigger.

The Common Speeds (from fastest to slowest):

| Notation | Name | Description |

|---|---|---|

| $O(1)$ | Constant Time | Time never changes, no matter the input size (e.g., accessing an array index, checking a Set). |

| $O(\log N)$ | Logarithmic Time | Cuts the problem in half each time (e.g., Binary Search). Super fast. |

| $O(N)$ | Linear Time | Goes through each item once (e.g., a single loop, traversing a list). This is usually great. |

| $O(N \log N)$ | Linearithmic Time | Almost always related to sorting. |

| $O(N^2)$ | Quadratic Time | Nested loops (a loop inside a loop). This is usually too slow for large inputs and is a common sign of a brute-force approach. |

Rule of Thumb for Interviews:

- For large inputs (up to $10^5$), aim for $O(N \log N)$ or better.

- For smaller inputs (up to $10^4$), $O(N^2)$ might be okay, but you should usually optimize.

Core Algorithmic Patterns

Two Pointers

What it is: Using two indices (or pointers) to move through a linear structure (arrays, strings) to avoid nested loops.

1. Same Direction (Fast & Slow Pointers)

- The Idea: Both pointers move forward, but one (the "Fast" pointer) moves 2 steps for every 1 step the other (the "Slow" pointer) takes.

- Best Used For:

- Finding the Middle: When Fast hits the end, Slow is exactly in the middle.

- Detecting Cycles: If Fast laps or meets Slow, you've found a cycle.

- Classic Example: Finding the middle node of a Linked List in a single pass.

2. Opposite Direction (Inward Pointers)

- The Idea: One pointer starts at the beginning (Left) and the other at the end (Right); they move inward toward the center.

- Best Used For:

- Symmetry Checks: Comparing symmetric parts.

- Sorted Arrays: Finding a pair/combination (moving a pointer inward eliminates half the possibilities).

- Avoiding Nested Loops: Tracks relationships between two points without $O(N^2)$ comparisons.

- Classic Example: Checking for a Valid Palindrome.

Sliding Window

Core Idea: Instead of just tracking two positions, you manage an entire range of values called the "window" to inspect segments without re-scanning data. It reduces time complexity to $O(N)$.

1. Fixed Size Sliding Window

- Use Case: "Find the maximum average of any subarray of size K."

- Mechanism: The window's length never changes. As you slide, you add one new element from the right and remove one old element from the left.

2. Dynamic Size Sliding Window

- Use Case: "Find the length of the longest substring with at most K unique characters."

- Mechanism: The window grows and shrinks dynamically.

- Expand: Grow one element at a time from the right.

- Shrink (Validation): If the window becomes invalid (e.g., hit a repeating char), remove elements from the left until valid again.

Binary Search

- The Big Idea: Cut the problem space in half repeatedly (logarithmic time, $O(\log N)$).

- Vanilla Version: Finding a target value in a sorted array.

- The Advanced Power (Monotonic Condition): You don't actually need a sorted array—you just need a monotonic condition. This is a rule that only changes once (e.g., False $\rightarrow$ True, never flips back).

- The Feasibility Function: Replace value comparison with a "yes/no" question: "Does this index/value satisfy my condition?"

- Example 1: Finding the first

Truein an array ofFalse, False, True, True... - Example 2: Finding the minimum in a Rotated Sorted Array.

- Example 1: Finding the first